Algorithmic gamblification of work | The workers behind the 'AI magic' | New Airbnb regulation

Good day! Sometimes this newsletter arrives in your mailbox every week; sometimes you have to wait three weeks for it. As someone who loves surprises, I prefer the second option ;-)

In recent weeks, I again took part in many platform-related events in various roles, had the opportunity to contribute to great projects and worked with professionals on almost every continent. How great it is that we can work location-independently, with tools that make it easy to operate in international teams. Of course, it is not about the tools themselves but about how you collaborate and use them. Just as with platforms, (online) tools facilitate; they do not lead. If you find that for you it is the other way round, it may be time for a good conversation with yourself.

And although a lot is possible online and remotely, it is also important to keep travelling (as responsibly as possible) and to talk to each other in person. That is why this week I 'briefly' took the train to Munich and back for an interview with the person in charge of the Crowdsourcing Code: a code of conduct agreed among eight 'crowdwork' platforms in Germany. I did this for the WageIndicator's new podcast: a podcast (and blog) on 'global gig economy issues'. The first edition of this monthly podcast will go live in mid-April.

Enough introduction: for this edition I have again collected a number of relevant pieces for you and provided them with my interpretation and commentary. Enjoy the read and have a nice day!

The house always wins: the algorithmic gamblification of work | Veena Dubal

The impact of algorithms and technology on the worker is the subject of part two of the European Platform Work Directive. For platforms offering 'on demand' jobs (taxi and delivery), the algorithm has a great impact on how work is found, assigned and performed, and workers are paid per job, often without knowing exactly what they will earn.

In the early days of Uber, everyone was excited about the 'surge pricing' the company used. If, at a given time and place, demand exceeds supply, prices rise. As a result, demand falls and supply rises. At the time, many saw this as a perfect economic model of flexible pricing. The hairdresser was often cited as an example: why pay the same for a haircut on Tuesday afternoon as on Friday evening, when demand is many times higher? It now appears (and this did not happen overnight) that these 'smart' (or: 'savvy') technologies are able to nudge and entice workers into doing more than they initially intended.
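The classic surge mechanism described above can be sketched in a few lines. This is a minimal toy model, assuming a multiplier equal to the ratio of open requests to available drivers, floored at 1.0 and capped at a hypothetical 3x; Uber's actual pricing formula is not public and is far more complex.

```python
def surge_multiplier(demand: int, supply: int, cap: float = 3.0) -> float:
    """Toy surge rule: the price multiplier grows with the demand/supply ratio.

    Assumption: a simple ratio capped at `cap`; real platforms' formulas are not public.
    """
    if supply <= 0:
        # No drivers available at all: jump straight to the cap.
        return cap
    ratio = demand / supply
    # Never go below the base price (1.0) and never above the cap.
    return min(cap, max(1.0, ratio))


def trip_price(base_fare: float, demand: int, supply: int) -> float:
    # Same trip, same base fare; only market conditions change the price.
    return round(base_fare * surge_multiplier(demand, supply), 2)


if __name__ == "__main__":
    print(trip_price(10.0, demand=40, supply=50))   # quiet moment: 10.0
    print(trip_price(10.0, demand=90, supply=50))   # busy: 18.0
    print(trip_price(10.0, demand=400, supply=50))  # capped: 30.0
```

A rule like this is at least explainable: every driver and rider in the same place at the same time sees the same multiplier, and the reason for a price increase is visible. That shared, predictable logic is precisely what personalised pay removes.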

In the article "The house always wins: the algorithmic gamblification of work", researcher Veena Dubal gives an interesting (and shocking) insight into the algorithms Uber uses to steer workers into being available as much as possible whenever it suits the platform. This is made possible partly because the worker bears the risk of not working: something that, in my opinion, is a very bad idea anyway.

In the piece, she writes:

“In a new article, I draw on a multi-year, first-of-its-kind ethnographic study of organizing on-demand workers to examine these dramatic changes in wage calculation, coordination, and distribution: the use of granular data to produce unpredictable, variable, and personalized pay. Rooted in worker on-the-job experiences, I construct a novel framework to understand the ascent of digitalized variable pay practices, or the transferal of price discrimination from the consumer to the labor context, what I identify as algorithmic wage discrimination. As a wage-setting technique, algorithmic wage discrimination encompasses not only digitalized payment for work completed, but critically, digitalized decisions to allocate work and judge worker behavior, which are significant determinants of firm control.

Though firms have relied upon performance-based variable pay for some time, my research in the on-demand ride hail industry suggests that algorithmic wage discrimination raises a new and distinctive set of concerns. In contrast to more traditional forms of variable pay like commissions, algorithmic wage discrimination arises from (and functions akin to) to the practice of consumer price discrimination, in which individual consumers are charged as much as a firm determines they are willing to pay.

As a labor management practice, algorithmic wage discrimination allows firms to personalize and differentiate wages for workers in ways unknown to them, paying them to behave in ways that the firm desires, perhaps for as little as the system determines that they may be willing to accept. Given the information asymmetry between workers and the firm, companies can calculate the exact wage rates necessary to incentivize desired behaviors, while workers can only guess as to why they make what they do.”
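To make the concept concrete, here is a deliberately simplified toy model. It is not Uber's system, which is not public. It assumes, hypothetically, that the firm estimates each worker's 'floor' as the lowest offer that worker has ever accepted and then pays just above it, never more than a base rate. The names `WorkerHistory`, `estimated_floor` and `margin` are illustrative inventions.

```python
from dataclasses import dataclass, field


@dataclass
class WorkerHistory:
    """Past per-trip offers (in currency units) and whether the worker accepted each one."""
    offers: list[tuple[float, bool]] = field(default_factory=list)

    def estimated_floor(self, default: float) -> float:
        # Hypothetical heuristic: the lowest offer this worker ever accepted.
        accepted = [pay for pay, ok in self.offers if ok]
        return min(accepted) if accepted else default


def uniform_offer(base_rate: float) -> float:
    # Traditional pay: everyone gets the same published rate for the same trip.
    return base_rate


def personalised_offer(history: WorkerHistory, base_rate: float, margin: float = 0.25) -> float:
    # Toy illustration of the concept: offer just above what the system
    # thinks this worker will accept, but never more than the base rate.
    return min(base_rate, history.estimated_floor(base_rate) + margin)


if __name__ == "__main__":
    base = 12.0
    hungry = WorkerHistory(offers=[(9.0, True), (10.0, True)])
    picky = WorkerHistory(offers=[(9.0, False), (11.5, True)])
    newcomer = WorkerHistory()
    for name, h in [("hungry", hungry), ("picky", picky), ("newcomer", newcomer)]:
        print(name, uniform_offer(base), personalised_offer(h, base))
```

Under the uniform rule all three drivers earn 12.0 for the identical trip; under the personalised rule they earn 9.25, 11.75 and 12.0, and none of them can see why. Note the perverse incentive: the driver who accepted low offers when they needed the money is the one who keeps receiving them.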

You don't have to be an activist to understand that such techniques are far from desirable. This piece includes the experiences of some drivers:

“Domingo, the longtime driver whose experience began this post, felt like over time, he was being tricked into working longer and longer, for less and less. As he saw it, Uber was not keeping its side of the bargain. He had worked hard to reach his quest and attain his $100 bonus, but he found that the algorithm was using that fact against him.”

I think platforms should take more responsibility for explaining their processes, and should have those explanations validated by a trusted third party. That platforms like Uber present complexity as added value for the worker is evident from this quote:

“If you joined Uber years ago, you will have joined when prices were quite simple. We set prices based on time and distance and then surge helped increase the price when demand was highest. Uber has come a long way since then, and we now have advanced technology that uses years of data and learning to find a competitive price for the time of day, location and distance of the trip.”

When someone takes pride in adding complexity, that should make you suspicious by default, because complexity can also be used to hide things. I wonder whether the upcoming European regulations will lead to less complexity and fewer opaque processes for workers. Either way, it should be a key issue for policymakers.

Video course: Build a successful marketplace | Sharetribe

Starting your own platform: where do you begin and which route should you take? That is a question you can safely leave to the team at Sharetribe. Sharetribe offers a simple and straightforward tool for putting together your own 'marketplace' without any programming expertise. I have known the company for about ten years, and in the 'build your own platform' programme at The Hague University of Applied Sciences, even students with no prior knowledge easily built their own platform with Sharetribe.

Sharetribe invests a lot in content to help their clients successfully launch their own platform. They also have a stake in this: they only make money when their customers are successful. This has resulted in an impressive collection of valuable content. Last week, they added something new to this: an online video course:

This ten-step video course takes you through your marketplace journey all the way from idea to scaling your business. Each step is packed with the latest marketplace facts, actionable advice, and relevant case studies.

In an hour and a half, you get a solid foundation in the steps you need to take to launch a successful platform.

Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic | Time

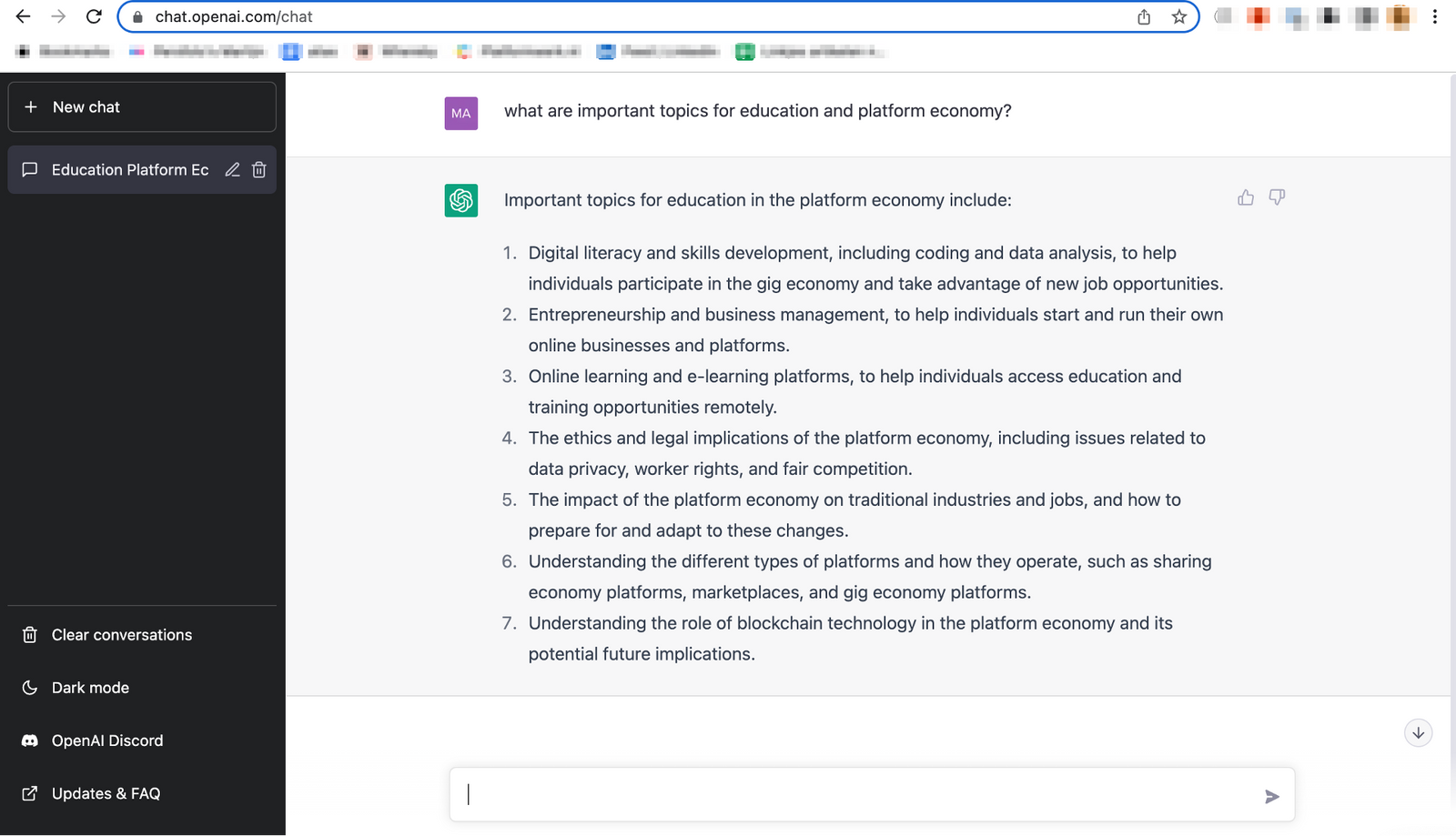

You have almost certainly come across OpenAI's remarkable tool ChatGPT, or perhaps tried it yourself: an impressive chatbot that gives a comprehensive and detailed answer to almost any question. Many sectors, including education, are anxiously considering what to do with such a tool. I have tried it myself and it is really impressive. For instance, ahead of the workshop on the platform economy and education, I asked it what the important topics are for education and the platform economy. A rather general and vague question. You can see the result in the image at the bottom of this piece.

Like AI and algorithms more generally, ChatGPT can seem like a magic black box in which everything happens by itself. But... is that really the case? Certainly not. Both training and operation leave loose ends that humans have to tie up. For instance, many tech companies use platforms like Amazon Mechanical Turk, where people all over the world (especially in parts of the world where you can get by on very little income and where people have few alternatives) perform micro-tasks lasting a few seconds: so-called 'clickwork'. This involves recognising images, but also tying up the loose ends of seemingly automatic systems. Content moderation on platforms like Facebook is organised along the same lines: not always through a pure platform, but on similar principles.

Mary L. Gray and Siddharth Suri wrote a fascinating book on clickwork: "Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass". Tim O'Reilly wrote the following about it: "The Wachowskis got it wrong. Humans aren't batteries for The Matrix, we are computer chips. In this fascinating book, Gray and Suri show us just how integral human online task workers are to the development of AI and the seamless operation of all the great internet services. Essential reading for anyone who wants to understand our technology-infused future." Highly recommended.

A long run-up to the piece I want to discuss today: "OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic." It describes how OpenAI managed to 'magically' make ChatGPT better and safer:

"To build that safety system, OpenAI took a leaf out of the playbook of social media companies like Facebook, who had already shown it was possible to build AIs that could detect toxic language like hate speech to help remove it from their platforms. The premise was simple: feed an AI with labeled examples of violence, hate speech, and sexual abuse, and that tool could learn to detect those forms of toxicity in the wild. That detector would be built into ChatGPT to check whether it was echoing the toxicity of its training data, and filter it out before it ever reached the user. It could also help scrub toxic text from the training datasets of future AI models.

To get those labels, OpenAI sent tens of thousands of snippets of text to an outsourcing firm in Kenya, beginning in November 2021. Much of that text appeared to have been pulled from the darkest recesses of the internet. Some of it described situations in graphic detail like child sexual abuse, bestiality, murder, suicide, torture, self harm, and incest."
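The approach in the quote (labelled examples in, detector out) is standard supervised text classification. Below is a minimal sketch, assuming a tiny hand-labelled dataset and a naive Bayes classifier, a textbook technique chosen here for brevity; OpenAI has not said it used this method, and `NaiveBayesFilter` and `filter_outputs` are hypothetical names. Every label in `examples` stands in for a judgement a human annotator had to make after reading the text.

```python
import math
from collections import Counter

LABELS = ("toxic", "ok")


def tokenize(text: str) -> list[str]:
    return text.lower().split()


class NaiveBayesFilter:
    """Multinomial naive Bayes over word counts, with Laplace (add-one) smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()

    def train(self, examples: list[tuple[str, str]]) -> None:
        # Each example is (text, label); the labels are what human annotators supply.
        # Assumes both labels occur at least once in the training data.
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def _log_score(self, words: list[str], label: str, vocab_size: int) -> float:
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)  # log prior
        total_words = sum(self.word_counts[label].values())
        for w in words:
            score += math.log((self.word_counts[label][w] + 1) / (total_words + vocab_size))
        return score

    def predict(self, text: str) -> str:
        words = tokenize(text)
        vocab = set(self.word_counts["toxic"]) | set(self.word_counts["ok"])
        # On an exact tie, max() returns the first label ("toxic"), so the filter errs on blocking.
        return max(LABELS, key=lambda lbl: self._log_score(words, lbl, len(vocab)))


def filter_outputs(model: NaiveBayesFilter, candidates: list[str]) -> list[str]:
    # Drop anything the detector flags before it reaches the user.
    return [c for c in candidates if model.predict(c) == "ok"]


if __name__ == "__main__":
    examples = [
        ("you are a worthless idiot", "toxic"),
        ("i hate you idiot", "toxic"),
        ("thanks for the helpful answer", "ok"),
        ("have a nice day", "ok"),
    ]
    model = NaiveBayesFilter()
    model.train(examples)
    print(filter_outputs(model, ["have a nice day", "what an idiot"]))
```

A real detector needs tens of thousands of such labels, and for the categories listed in the quote, each label means a person reading the material in full.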

OpenAI considers this work very important: "Classifying and filtering harmful [text and images] is a necessary step in minimising the amount of violent and sexual content included in training data and creating tools that can detect harmful content." The article's authors are rightly critical after their research: "But the working conditions of data labelers reveal a darker part of that picture: that for all its glamour, AI often relies on hidden human labor in the Global South that can often be damaging and exploitative. These invisible workers remain on the margins even as their work contributes to billion-dollar industries."

ChatGPT remains an impressive tool, but the magic is a lot less clean than tech optimists would have us believe. Andrew Strait puts it powerfully in the piece: "They're impressive, but ChatGPT and other generative models are not magic - they rely on massive supply chains of human labour and scraped data, much of which is unattributed and used without consent".

What can we learn from this?

The insights from this story are interesting in themselves, but I think it is important to look further. What can we learn from this case study?

For one thing, it shows that these kinds of tools feast on the work of others: content scraped from its creators, and the labour of low-paid moderators and workers. The bright minds who devise and build these systems walk away with the credit and the money, but I think we need to recognise more clearly that this content does not fall from the sky. A discussion about how fair and desirable this is may well be necessary.

I would also like to broaden that discussion a bit. I regularly speak to very committed scientists with clear opinions about what is 'fair' who, meanwhile, use Amazon Mechanical Turk for their own research. I understand that it is incredibly convenient, but then you are hardly in a position to point fingers. A good conversation about the fair treatment and pay of everyone in the chain is missing from many innovations, and as far as I am concerned it could be had far more often. People who perform clickwork are a kind of 'disposable labour': the moment they are no longer needed, no one cares about them. That is why it is only right that the authors of Ghost Work and of this article point out these facts to us.

New Airbnb legislation makes enforcing the rules much easier

Airbnb and regulation: an issue that has been around for quite a few years. The Netherlands introduced national rules earlier, and these are now being complemented by European regulation. I think that is a good step for everyone.

The regulations will also be accompanied by a European tool: "The Commission is coming up with a single European data tool for exchanging information on holiday rentals between platforms and local authorities. Platforms will now have to share, in places where rules apply, data every month on how many nights a house or flat has been rented out and to how many people."
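What platforms would have to send each month boils down to a simple aggregation. A minimal sketch, assuming a hypothetical booking record with a listing identifier, check-in date, number of nights and number of guests; the actual data format of the Commission's tool is not described in the source, and `Booking` and `monthly_report` are illustrative names.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Booking:
    listing_id: str  # hypothetical identifier, e.g. a local registration number
    check_in: date
    nights: int
    guests: int


def monthly_report(bookings: list[Booking], year: int, month: int) -> dict[str, dict[str, int]]:
    """Per listing: total nights rented and total guests for stays starting in the given month.

    Simplification (assumption): a stay is attributed entirely to its check-in month.
    """
    report: dict[str, dict[str, int]] = defaultdict(lambda: {"nights": 0, "guests": 0})
    for b in bookings:
        if b.check_in.year == year and b.check_in.month == month:
            report[b.listing_id]["nights"] += b.nights
            report[b.listing_id]["guests"] += b.guests
    return dict(report)


if __name__ == "__main__":
    bookings = [
        Booking("A", date(2023, 3, 3), nights=3, guests=2),
        Booking("A", date(2023, 3, 20), nights=2, guests=4),
        Booking("B", date(2023, 4, 1), nights=5, guests=1),
    ]
    print(monthly_report(bookings, 2023, 3))  # {'A': {'nights': 5, 'guests': 6}}
```

Even this toy version shows where the hard part lies: turning `listing_id` into something a municipality can act on, such as a registration number linked to an address, which is the implementation question raised below.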

Ultimately, each city will continue to set its own holiday rental rules. That too is a good step, although the case of Amsterdam shows that it is not as simple as it seems.

Alongside this tool, it would also be good to bring knowledge and research together in a central place, so that not every city has to reinvent the wheel. Furthermore, I am (very) curious about the implementation. Connecting the platforms to the central European tool will probably work out. But what about the translation to the municipalities' own systems (a link with the land registry in the Netherlands, for instance, seems light years away, and we are a country in the digital vanguard...), and what privacy risks are involved? I will keep following this.

About and contact

What impact does the platform economy have on people, organisations and society? My fascination with this phenomenon started in 2012. Since then, I have been seeking answers by engaging in conversation with all stakeholders involved, conducting research and participating in the public debate. I always do so out of wonder, curiosity and my independent role as a professional outsider.

I share my insights through my Dutch and English newsletters, presentations and contributions in (international) media and academic literature. I have also written several books on the topic and am the founder of GigCV, a new standard that gives platform workers access to their own data. Besides all my own projects and explorations, I am also a member of the 'gig team' of the WageIndicator Foundation and part of the knowledge group of the Platform Economy research group at The Hague University of Applied Sciences.

Need inspiration and advice or research on issues surrounding the platform economy? Or looking for a speaker on the platform economy for an online or offline event? Feel free to contact me via a reply to this newsletter, via email (martijn@collaborative-economy.com) or phone (0031650244596).

Also visit my YouTube channel with over 300 interviews about the platform economy and my personal website where I regularly share blogs about the platform economy. Interested in my photos? Then check out my photo page.